The Transformer Notes

Introduction

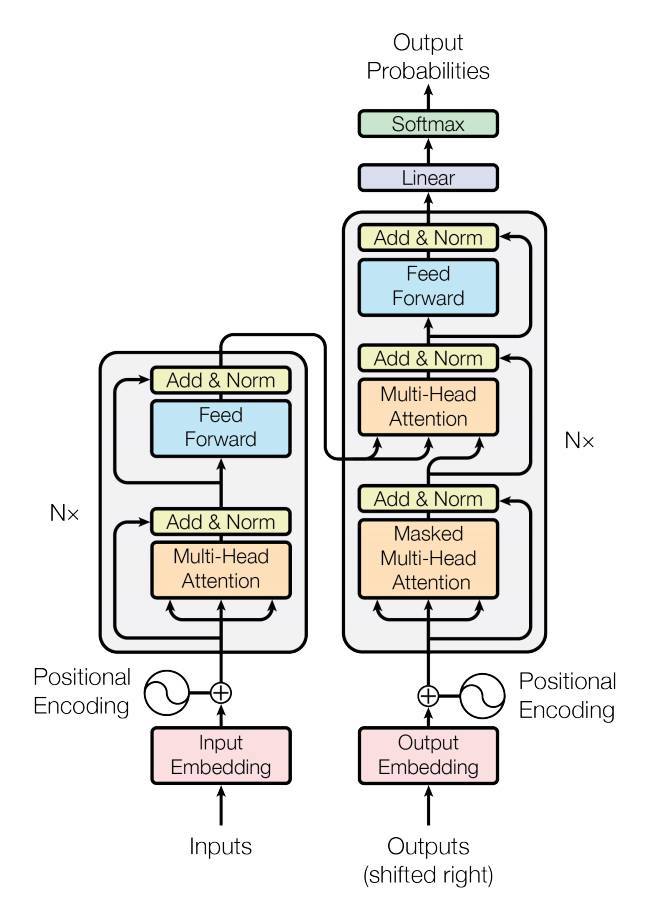

Figure 1: Transformer architecture

The main components are:

- Positional Encoder

- Transformer Encoder

- Transformer Decoder

- Word (Dictionary) Embedding

Positional Encoder
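
These notes do not yet say which positional encoding is meant. A common choice, and the one used in The Annotated Transformer (Klein et al., 2021), is the fixed sinusoidal encoding: PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The sketch below assumes that scheme. The function names `sinusoidal_positional_encoding` and `add_positional_encoding` are hypothetical, and plain Python lists stand in for tensors.

```python
import math


def sinusoidal_positional_encoding(max_len: int, d_model: int) -> list[list[float]]:
    """Return a max_len x d_model table of sinusoidal position encodings.

    Even columns hold sines, odd columns hold cosines; each sine/cosine pair
    shares the angular frequency 1 / 10000^(2i / d_model).
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even so sine/cosine columns pair up")
    table = []
    for pos in range(max_len):
        row = [0.0] * d_model
        for col in range(0, d_model, 2):
            # col is the even index 2i, so the exponent is col / d_model.
            angle = pos / (10000 ** (col / d_model))
            row[col] = math.sin(angle)
            row[col + 1] = math.cos(angle)
        table.append(row)
    return table


def add_positional_encoding(embeddings, table):
    """Add position encodings elementwise to a seq_len x d_model embedding matrix."""
    return [[e + p for e, p in zip(emb_row, pe_row)]
            for emb_row, pe_row in zip(embeddings, table)]
```

For example, `sinusoidal_positional_encoding(4, 8)[0]` is `[0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]`, since sin 0 = 0 and cos 0 = 1. The table does not depend on the input tokens, so it can be computed once and added to every embedded sequence.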

Transformer Encoder
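
The central operator of the encoder is self-attention, where the queries, keys and values all come from the same input sequence. As a hedged illustration, assuming the single-head scaled dot-product attention of the original Transformer, softmax(Q K^T / sqrt(d_k)) V, the sketch below computes it on small list-of-lists matrices. The name `scaled_dot_product_attention` is hypothetical here, and the learned Q/K/V projections, multiple heads, residual connections, layer normalization and the feed-forward sublayer are all left out.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """Compute softmax(Q K^T / sqrt(d_k)) V.

    q: n_q x d_k, k: n_k x d_k, v: n_k x d_v (lists of lists of floats).
    Returns (outputs n_q x d_v, attention weights n_q x n_k).
    """
    d_k = len(q[0])
    scale = math.sqrt(d_k)
    outputs, weights = [], []
    for q_row in q:
        # One score per key: the dot product, scaled down by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / scale for k_row in k]
        w = softmax(scores)
        weights.append(w)
        # Each output row is the attention-weighted average of the value rows.
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v)))
                        for c in range(len(v[0]))])
    return outputs, weights
```

For self-attention, call it as `scaled_dot_product_attention(x, x, x)` on the (projected) input rows. Dividing by sqrt(d_k) keeps the dot products from growing with the dimension, which would otherwise push the softmax toward very peaked weights.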

Transformer Decoder
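
One thing that sets the decoder apart is that its self-attention must not look at later target positions, so that each output token depends only on the tokens before it. A minimal sketch of that masking, assuming the lower-triangular "subsequent" mask idea used in The Annotated Transformer, is below. `subsequent_mask` and `masked_softmax` here are hypothetical pure-Python helpers, not that tutorial's PyTorch code.

```python
import math


def subsequent_mask(size):
    """Boolean size x size mask: entry [i][j] is True when position i may attend to j.

    Position i may only see positions 0..i, so later (future) tokens are hidden.
    """
    return [[j <= i for j in range(size)] for i in range(size)]


def masked_softmax(scores, mask_row):
    """Softmax over the entries allowed by mask_row; blocked entries get weight 0."""
    # Subtract the max over the allowed scores only, for numerical stability.
    peak = max(s for s, ok in zip(scores, mask_row) if ok)
    exps = [math.exp(s - peak) if ok else 0.0 for s, ok in zip(scores, mask_row)]
    total = sum(exps)
    return [e / total for e in exps]
```

For example, `subsequent_mask(3)` is `[[True, False, False], [True, True, False], [True, True, True]]`, and `masked_softmax([1.0, 2.0, 3.0], subsequent_mask(3)[0])` returns `[1.0, 0.0, 0.0]`: the first position can only attend to itself. The Annotated Transformer instead fills blocked scores with a large negative number before the softmax; zeroing them directly, as here, gives the same weights up to floating-point rounding.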

Word Embedding
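
The component list calls this a word (dictionary) embedding: a lookup table that maps each token id from a vocabulary to a d_model-dimensional vector. The sketch below assumes a hypothetical toy vocabulary and a randomly initialized table (in a trained model the rows are learned), and, following The Annotated Transformer, multiplies each looked-up vector by sqrt(d_model). `make_embedding_table`, `embed` and `vocab` are illustrative names, not part of any library.

```python
import math
import random


def make_embedding_table(vocab_size, d_model, seed=0):
    """Random vocab_size x d_model table; in a trained model these rows are learned."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(vocab_size)]


def embed(token_ids, table):
    """Look up one row per token id and scale it by sqrt(d_model)."""
    scale = math.sqrt(len(table[0]))
    return [[x * scale for x in table[t]] for t in token_ids]


# Hypothetical toy dictionary mapping words to integer ids.
vocab = {"the": 0, "cat": 1, "sat": 2}
ids = [vocab[w] for w in "the cat sat".split()]
table = make_embedding_table(len(vocab), d_model=4)
vectors = embed(ids, table)  # 3 token vectors, each of length 4
```

The resulting `vectors` have the same shape as a slice of the positional-encoding table, which is what allows the two to be added elementwise at the bottom of the encoder and decoder stacks.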

Other Operators

References

- Deisenroth, M. P., Faisal, A. A., & Ong, C. S. (2020). Mathematics for Machine Learning. Cambridge University Press. https://doi.org/10.1017/9781108679930

- Klein, G., Kim, Y., Deng, Y., Senellart, J., & Rush, A. M. (2021). The Annotated Transformer. https://nlp.seas.harvard.edu/2018/04/03/attention.html [Online; accessed 2023-04-04]